Red Hat Linux Containers: Not Just Recycled Ideas

Some people accuse Red Hat of dusting off an old idea, Linux containers, and presenting it as if it were something new. I'll acknowledge that Sun Microsystems offered containers under Solaris years ago and that the concept isn't new. But Docker and Red Hat together have been able to bring new packaging attributes to containers, making them an alternative that's likely to exist alongside virtual machines for moving workloads into the cloud.

And containers promise to fit more seamlessly into a DevOps world than virtual machines do. Containers can provide an automated way for the components to receive patches and updates -- without a system administrator's intervention. A workload sent out to the cloud a month ago may have had the Heartbleed vulnerability. When the same workload is sent in a container today, it's been fixed, even though a system administrator did nothing to correct it. The update was supplied to an open-source code module by the party responsible for it, and the updated version was automatically retrieved and integrated into the workload as it was containerized.

That's one reason why Paul Cormier, Red Hat's president of products and technologies, called containers an emerging technology "that will drive the future" at the Red Hat Summit this week. He didn't specifically mention workload security; rather, he cited the increased mobility a workload gains when it's packaged inside a container. In theory at least, a containerized application can be sent to different clouds, with the container interface navigating the differences. The container checks with the host server to make sure it's running the Linux kernel that the application needs. The rest of the operating system is resident in the container itself.
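To make that kernel check concrete, here is a minimal Python sketch of the kind of comparison involved. It's an illustration only, not how Docker or Red Hat's tooling actually performs the check. The function names and the version strings are hypothetical, chosen for the example.

```python
import platform
import re
from typing import Optional, Tuple


def parse_kernel_version(release: str) -> Tuple[int, int, int]:
    """Pull the leading numeric fields out of a kernel release string.

    '3.10.0-123.el7.x86_64' becomes (3, 10, 0); a missing third field counts as 0.
    """
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def host_kernel_satisfies(required_min: str, release: Optional[str] = None) -> bool:
    """Return True if the host kernel is at least the version the application needs.

    If no release string is passed, ask the running host via platform.release().
    """
    if release is None:
        release = platform.release()
    return parse_kernel_version(release) >= parse_kernel_version(required_min)


if __name__ == "__main__":
    # Hypothetical requirement, used only for illustration.
    print(host_kernel_satisfies("3.8"))
```

Everything above the kernel, such as the user-mode libraries, travels inside the container, so the kernel version is the one thing this kind of check needs to ask the host about.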

Is that really much of an advantage? Aren't CPUs powerful enough and networks big enough to move the whole operating system with the application, the way virtual machines do? VMware is betting heavily on the efficacy of moving an ESX Server workload from the enterprise to a like environment in the cloud, the vCloud Hybrid Service. No need to worry about which Linux kernel is on the cloud server. The virtual machine has a complete operating system included with it.

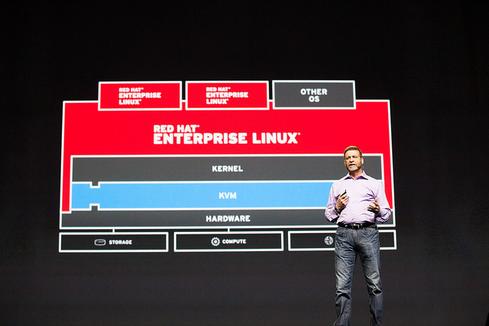

Paul Cormier at Red Hat Summit 2014.

(Source: Red Hat)

But that's one of the points in favor of containers, in my opinion. Sun used to boast how many applications could run under one version of Solaris. In effect, all the containerized applications on a Linux cloud host are sharing the host's Linux kernel and providing the rest of the Linux user-mode libraries themselves. That makes each container a smaller-sized, less-demanding workload on the host and allows more workloads per host.

Determining how many workloads fit on a host is an inexact science. It will depend on how much of the operating system each workload originator decides to include in the container. But if the originator takes a disciplined approach and includes only the needed class libraries, a host server that can run 10 large VMs would be able to handle 100 containerized applications of similar caliber, Red Hat CTO Brian Stevens said Wednesday in a keynote at the Red Hat Summit.
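A back-of-the-envelope calculation shows how a ratio like that could arise. The Python sketch below assumes memory is the only limiting resource, which is a simplification, and every number in it is invented for illustration. None of the figures comes from Stevens or Red Hat.

```python
def workloads_per_host(host_memory_mb: int, per_workload_mb: int, reserved_mb: int = 0) -> int:
    """Count how many equal-sized workloads fit in the host's usable memory.

    Assumes memory is the only constraint; CPU, I/O, and storage are ignored.
    """
    if per_workload_mb <= 0:
        raise ValueError("per_workload_mb must be positive")
    usable_mb = host_memory_mb - reserved_mb
    return max(0, usable_mb // per_workload_mb)


# Hypothetical figures: a 64 GB host with 4 GB held back for the host itself.
HOST_MB = 65536
RESERVED_MB = 4096

# A VM carrying a full guest operating system (assumed 6 GB each).
vms = workloads_per_host(HOST_MB, 6144, RESERVED_MB)

# A container carrying only the libraries it needs (assumed 614 MB each).
containers = workloads_per_host(HOST_MB, 614, RESERVED_MB)

print(vms, containers)
```

With these made-up footprints, the same host holds 10 VMs or 100 containers. The ratio comes entirely from the assumed per-workload size, which is why how much of the operating system goes into each container matters so much.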

It's the 10X efficiency factor, if Stevens is correct, that's going to command attention among Linux developers, enterprise system administrators, and cloud service providers. Red Hat Enterprise Linux is already a frequent choice in the cloud. It's not necessarily the first choice for development, where Ubuntu, Debian, and SUSE may be used as often as Red Hat. When it comes to running production systems, however, Red Hat rules.

Red Hat has produced a version of Red Hat Enterprise Linux, dubbed Atomic Host, geared specifically to run Linux containers. Do we need another version of RHEL? Will containers really catch on? Will Red Hat succeed in injecting vigor into its OpenShift platform for developers through this container expertise?

We shall see. But the idea of containers addresses several issues that virtualization could not solve by itself. In the future, containers may be a second way to move workloads into the cloud when certain operating characteristics are sought, such as speed of delivery to the cloud, speed of initiation, and concentration of workloads using the same kernel on one host.

Charles Babcock is an editor-at-large for InformationWeek, having joined the publication in 2003. He is the former editor-in-chief of Digital News, former software editor of Computerworld and former technology editor of Interactive Week. He is a graduate of Syracuse ...