By Nicholas Barrowclough, President of AKCP

Business operations, banking, education and our personal lives all rely on digital infrastructure. In fact, it would be difficult to identify an area of modern life that it does not touch. But what is behind that digital infrastructure? Data centres serve as the backbone of today's interconnected world. Housing servers, storage systems and networking equipment, they power everything from online shopping to streaming services, and our appetite is insatiable. There is an ever-increasing demand for data storage and processing. But all of this has led to a growing challenge: how do you maintain optimal performance, maximum uptime and high availability while also minimising the environmental impact of data centres? The answer lies in the changes now revolutionising data centre cooling technologies.

In 2022, data centres accounted for 1-1.3% of the world's electricity consumption. That amounts to an incredible 240-340 terawatt-hours (TWh). The average hyperscale data centre draws 20-50 MW of power. That’s enough to power up to 37,000 homes. What is more, global data centre energy usage is expected to quadruple by 2030.
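To put the hyperscale figure on the same footing as the global total, it helps to convert power into annual energy. The sketch below reads the 20-50 figure as a continuous draw in megawatts (an assumption) and works out the yearly energy, plus the per-home consumption that the 37,000-home comparison would imply if it refers to the upper bound (also an assumption). The function names are illustrative only.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours; leap years ignored


def annual_energy_gwh(power_mw: float) -> float:
    """Energy in GWh used in one year by a load drawing power_mw continuously."""
    return power_mw * HOURS_PER_YEAR / 1000.0  # MWh -> GWh


def implied_mwh_per_home(power_mw: float, homes: int) -> float:
    """Annual MWh per home implied if the same energy is shared across `homes`."""
    return power_mw * HOURS_PER_YEAR / homes


for mw in (20, 50):
    print(f"{mw} MW continuous = {annual_energy_gwh(mw):.1f} GWh per year")

# Assumption: the 37,000-home comparison refers to the 50 MW upper bound.
print(f"Implied use per home: {implied_mwh_per_home(50, 37_000):.1f} MWh per year")
```

On these assumptions a single hyperscale site uses a few hundred gigawatt-hours a year, which is how a relatively small number of facilities adds up to hundreds of terawatt-hours worldwide.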

All of this has brought data centres under the scrutiny of government regulators. In some areas there simply isn’t enough power to build any more data centres. Singapore and Ireland have already taken steps to control data centre energy and water usage, implementing moratoriums on new builds and imposing strict requirements on existing infrastructure. Germany has tabled the idea of enshrining in law a mandate that data centres must meet a PUE of 1.2 by 2026. The proposal would also make waste heat reuse a legal requirement, demanding that by 2028 at least 20% of waste heat is made available for reuse.

The debate is not only about power: data centres also consume vast quantities of water in their cooling systems. A typical hyperscale facility can consume 3-5 million gallons of water per day, comparable to the daily water use of a city of 30,000-40,000 people. Research has shown that a typical AI chatbot conversation of 20-50 questions consumes 500 ml of water. That may not sound like a lot, but factor in billions of users and it starts to add up pretty quickly.
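The water figures above mix units, so a quick conversion makes them easier to compare. This is a minimal sketch that assumes US liquid gallons (the original does not say which gallon is meant) and simply divides the 500 ml conversation figure by the number of questions. The helper names are hypothetical.

```python
LITRES_PER_US_GALLON = 3.785411784  # definition of the US liquid gallon


def gallons_to_megalitres(gallons: float) -> float:
    """Convert US gallons to millions of litres."""
    return gallons * LITRES_PER_US_GALLON / 1e6


def ml_per_question(total_ml: float, questions: int) -> float:
    """Average water use per question for one chatbot conversation."""
    return total_ml / questions


# Assumption: the 3-5 million gallons per day figure is in US gallons.
for gallons in (3_000_000, 5_000_000):
    print(f"{gallons:,} gal/day = {gallons_to_megalitres(gallons):.1f} million litres/day")

for questions in (20, 50):
    print(f"{questions} questions: {ml_per_question(500, questions):.0f} ml per question")
```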

In the summer of 2023 Spain was in the grip of a drought, with families under water quotas and not allowed to water their gardens. Even farmers were impacted, with insufficient water for crops. The grain basket of Spain, the Castilla-La Mancha region, was expected to lose 80-90% of the 2023 harvest due to drought. Yet, in the middle of this area, Meta is planning a hyperscale data centre that will consume 665 million litres of water per year.

In London, Thames Water are looking at water-intensive businesses and adjusting their pricing models. The Uptime Institute states that only 39% of data centres actually track their water usage. The big players in the hyperscale industry, Amazon, Google and Microsoft, have started publishing their water usage after years of claiming it was a trade secret. All of this stems from the increasing demand from governments and the public for accountability and transparency from the industry on its environmental impact. Water usage has become such an issue that an industry-standard metric known as Water Usage Effectiveness (WUE) has been coined to go alongside Power Usage Effectiveness (PUE).
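Both metrics are simple ratios against the energy delivered to IT equipment: PUE divides total facility energy by IT energy, and WUE divides site water use in litres by IT energy in kilowatt-hours. The sketch below shows the arithmetic. The annual figures are invented purely for illustration and do not describe any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_litres: float, it_equipment_kwh: float) -> float:
    """Water Usage Effectiveness in litres per kWh of IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return site_water_litres / it_equipment_kwh


# Hypothetical annual figures for one facility (illustration only).
it_kwh = 10_000_000
facility_kwh = 12_000_000
water_litres = 18_000_000

print(f"PUE = {pue(facility_kwh, it_kwh):.2f}")
print(f"WUE = {wue(water_litres, it_kwh):.2f} L/kWh")
```

A PUE of 1.0 would mean every kilowatt-hour reaches the IT equipment, so a target of 1.2 leaves only 20% overhead for cooling, power distribution and everything else.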

A key strategy on the pathway to energy-efficient, sustainable and eco-friendly data centres is the development and adoption of advanced cooling technologies. We are reaching the limits of what forced-air cooling is able to achieve. Methods such as direct-to-chip cooling and immersion cooling, where servers are submerged in a non-conductive dielectric fluid, are efficient, but expensive and disruptive to install. However, the fact is that water conducts heat 3,000 times better than air, and with high-intensity computing applications such as AI, cooling with air will become practically impossible. The US Department of Energy (DOE) has already invested US$40 million in the research and development of liquid cooling technologies under its COOLERCHIPS programme. Many of the projects under this programme are focusing on direct-to-chip cooling methods.

One such company is JetCool, who are focusing on delivering cold water via micro jets that target the areas of the chip where most of the heat is generated. Their self-contained system eliminates the need for any evaporative cooling, saving up to 90% of annual water usage. JetCool solutions deployed at colocation data centres have shown a 30% reduction in chip temperatures and a 14% reduction in power consumption.
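The comparison between water and air drawn above can be made concrete with the basic heat balance Q = ρ × flow × c × ΔT. The sketch below uses approximate textbook properties at room temperature and a hypothetical 50 kW rack with a 10 K coolant temperature rise, both chosen only for illustration. Per unit volume, water carries a few thousand times more heat per degree than air, which is one plausible reading of the "3,000 times" figure, though that interpretation is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    density: float        # kg/m^3
    specific_heat: float  # J/(kg K)


# Approximate room-temperature property values (textbook figures).
AIR = Fluid("air", density=1.2, specific_heat=1005.0)
WATER = Fluid("water", density=997.0, specific_heat=4180.0)


def volumetric_heat_capacity(fluid: Fluid) -> float:
    """Heat carried per cubic metre per kelvin, in J/(m^3 K)."""
    return fluid.density * fluid.specific_heat


def flow_for_heat_load(fluid: Fluid, heat_w: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) needed to remove heat_w with a delta_t_k rise."""
    return heat_w / (volumetric_heat_capacity(fluid) * delta_t_k)


ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
print(f"Water carries about {ratio:.0f} times more heat per unit volume than air")

# Hypothetical 50 kW rack with a 10 K coolant temperature rise.
for fluid in (AIR, WATER):
    flow = flow_for_heat_load(fluid, heat_w=50_000, delta_t_k=10)
    print(f"{fluid.name}: {flow * 1000:.1f} litres per second")
```

On these numbers the air-cooled case needs thousands of litres of air per second where the liquid-cooled case needs little more than one litre of water, which is why dense AI racks push designs towards liquid.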

In addition to the above solutions, other technologies such as air-side and water-side economisers are being embraced by data centre designers and operators. These are leveraging external air and water sources to cool data centre equipment. By harnessing the natural environment, data centres can drastically decrease their energy consumption.

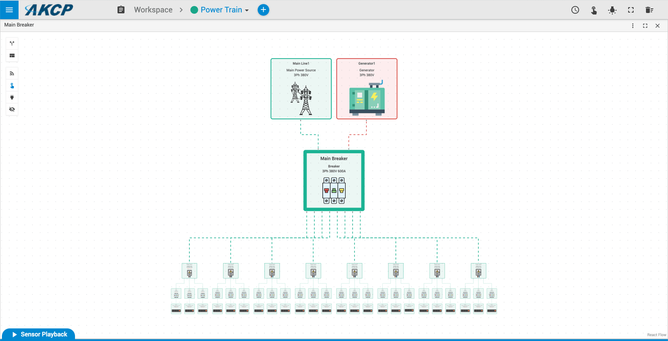

Software can also aid in data centre energy reduction, notably data centre infrastructure management (DCIM) software that integrates data-driven analytics and AI. These real-time monitoring systems can lead to more energy-efficient cooling strategies. Predictive analysis of data centre operations, monitoring of loads and anticipation of temperature fluctuations all allow for proactive adjustments that optimise cooling systems and energy use.

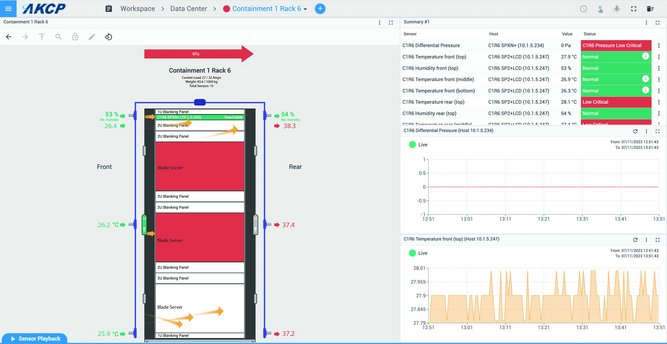
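As a rough illustration of what anticipating temperature fluctuations can mean in practice, the sketch below fits a straight line to recent inlet temperature samples and estimates how long it would take to reach a limit. This is a deliberately simple stand-in, not the method used by any particular DCIM product. The function names, sample values and 27°C limit are all assumptions.

```python
def fit_trend(samples):
    """Least-squares line through (minute, temp_c) samples.

    Returns (slope in degC per minute, intercept in degC).
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in samples)
    if sxx == 0:
        raise ValueError("need at least two distinct timestamps")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in samples)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


def minutes_until(samples, limit_c):
    """Minutes after the last sample until the fitted trend reaches limit_c.

    Returns 0.0 if the trend is already at or above the limit, and None if
    the trend is flat or falling.
    """
    slope, intercept = fit_trend(samples)
    last_t = samples[-1][0]
    if slope * last_t + intercept >= limit_c:
        return 0.0
    if slope <= 0:
        return None
    return (limit_c - intercept) / slope - last_t


# Hypothetical inlet readings: (minutes elapsed, temperature in degC).
readings = [(0, 22.0), (5, 22.5), (10, 23.0), (15, 23.5)]
eta = minutes_until(readings, limit_c=27.0)
print(f"Estimated minutes until limit: {eta:.0f}")
```

A real system would combine many more inputs, such as IT load and outside conditions, but the principle is the same: act on the forecast rather than wait for the alarm.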

Operating data centres at elevated temperatures, closer to the upper limits of ASHRAE-recommended specifications, is another method of reducing the energy consumption of the associated cooling systems. However, this can be a risky game, as there is less margin for error. In this case, a comprehensive monitoring system that can detect hotspots at rack level needs to be deployed. AKCP thermal map sensors are ideal for this, providing temperature at the front and rear of the rack at the top, middle and bottom, ∆T at the top, middle and bottom, and humidity. The inclusion of a power meter also allows sensor-backed CFD analysis, sensorCFD, to be conducted. The generated model aids in verifying data centre performance in accordance with the CFD principles used during the design stage of the data centre facility.
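To show how front and rear readings at three heights turn into ∆T values and a safety margin, here is a minimal sketch. The class and field names are hypothetical and do not represent AKCP's data model or API. The 27°C limit is the upper end of the ASHRAE recommended inlet range as commonly quoted, and should be checked against the current edition of the guidelines.

```python
from dataclasses import dataclass

LEVELS = ("top", "middle", "bottom")
RECOMMENDED_MAX_INLET_C = 27.0  # assumed upper limit of the recommended range


@dataclass
class RackThermalMap:
    """Hypothetical container for one rack's six temperature readings."""
    front_c: dict  # inlet temperature by level, degC
    rear_c: dict   # exhaust temperature by level, degC

    def delta_t(self) -> dict:
        """Rear minus front temperature at each level."""
        return {lvl: self.rear_c[lvl] - self.front_c[lvl] for lvl in LEVELS}

    def inlet_headroom_c(self, limit_c: float = RECOMMENDED_MAX_INLET_C) -> float:
        """Margin between the warmest inlet reading and the limit."""
        return limit_c - max(self.front_c[lvl] for lvl in LEVELS)


# Illustrative readings only.
rack = RackThermalMap(
    front_c={"top": 26.5, "middle": 24.0, "bottom": 22.0},
    rear_c={"top": 38.0, "middle": 35.5, "bottom": 33.0},
)
print("Delta T by level:", rack.delta_t())
print(f"Inlet headroom: {rack.inlet_headroom_c():.1f} degC")
```

In this example the top of the rack is within half a degree of the limit while the bottom has five degrees to spare, exactly the kind of stratification that an average room temperature would hide.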

The implementation of solutions such as those from AKCP allows data centre operators to streamline their facilities and increase efficiency, which in turn results in lower OpEx and a reduced carbon footprint. Heatmaps of the facilities created with sensor data identify not only hot spots but also cold spots. Traditionally, temperature monitoring in the data centre has been thought of as a way to prevent servers overheating. However, with the shift to energy savings, cold spots signify wasted cooling energy. The idea that sensors are an insurance policy for when things go wrong is therefore giving way to the view that they are an asset that saves operators money.

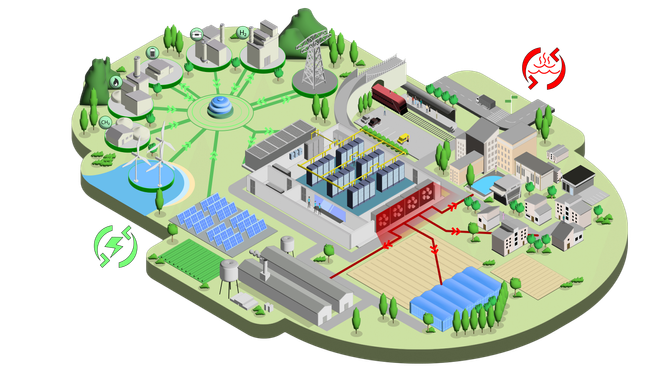
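The hot spot and cold spot idea behind such heatmaps can be sketched as a simple classification of inlet temperatures against a target band. The thresholds and rack names below are assumptions for illustration. An operator would choose a band to suit their own equipment and risk appetite.

```python
def classify_inlets(inlets_c, low_c=18.0, high_c=27.0):
    """Label each rack inlet as 'cold', 'ok' or 'hot' against an assumed band."""
    labels = {}
    for rack_id, temp in inlets_c.items():
        if temp > high_c:
            labels[rack_id] = "hot"    # risk of overheating
        elif temp < low_c:
            labels[rack_id] = "cold"   # likely overcooled, wasted energy
        else:
            labels[rack_id] = "ok"
    return labels


def summarise(labels):
    """Count how many racks fall into each category."""
    counts = {"cold": 0, "ok": 0, "hot": 0}
    for label in labels.values():
        counts[label] += 1
    return counts


# Hypothetical inlet temperatures in degC for four racks.
inlets = {"A1": 16.5, "A2": 22.0, "B1": 28.2, "B2": 17.9}
labels = classify_inlets(inlets)
print(labels)
print(summarise(labels))
```

Here two of the four racks are flagged as cold, which points to cooling capacity that could be redirected or dialled back.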

In conclusion, the data centre industry is undergoing a transformation in its approach to how data centres are cooled. It is investing in the research and development of new technologies and embracing them in facility design, implementing innovative design practices, employing data analytics and looking at renewable energy sources. It is certainly a challenge to be under pressure to reduce carbon footprint while meeting the ever-growing demands of the digital age.

But with some of the world's greatest minds turning their attention to the challenge, there is surely a pathway to an energy efficient and sustainable future for the data centre industry.

This article is an advertorial and monetary payment was received from AKCP. It has gone through editorial control and passed the assessment for being informative.

https://datacentremagazine.com/articles/transforming-data-centre-cooling-for-a-sustainable-future