PRTG Cluster Basics

One of the major new features in version 8 of PRTG Network Monitor is called “Clustering”. A PRTG Cluster consists of two or more installations of PRTG Network Monitor that work together to form a high availability monitoring system.

The objective is to reach true 100% uptime for the monitoring tool. With clustering, uptime is no longer degraded by lost connections due to an Internet outage at a PRTG server’s location, by failing hardware, or by downtime during a software upgrade of the operating system or of PRTG itself.

How a PRTG Cluster Works

A PRTG cluster consists of one “Primary Master Node” and one or more “Failover Nodes”. Each node is simply a full installation of PRTG Network Monitor that could perform all monitoring and alerting on its own. Nodes are connected to each other using two TCP/IP connections. They communicate in both directions, and a single node only needs to connect to one other node to integrate into the cluster.
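The connectivity rule can be made concrete with a graph model. The following is a minimal conceptual sketch in Python, not PRTG code. It treats nodes and their links as an undirected graph and checks whether every node is reachable. It assumes that a node joined through one peer can reach the rest of the cluster via that peer. The function names `reachable_nodes` and `cluster_is_connected` are hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple


def reachable_nodes(links: List[Tuple[str, str]], start: str) -> Set[str]:
    """Return every node reachable from `start` over bidirectional links."""
    # Links carry traffic both ways, so build an undirected adjacency map.
    neighbours: dict = {}
    for a, b in links:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    # Breadth-first search from the starting node.
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def cluster_is_connected(nodes: Set[str], links: List[Tuple[str, str]]) -> bool:
    """True if every node is reachable, directly or via other nodes.

    Assumption: reachability through an intermediate node counts as
    being integrated into the cluster.
    """
    if not nodes:
        return True
    return nodes.issubset(reachable_nodes(links, next(iter(nodes))))
```

For example, a failover node linked only to another failover node is still integrated: `cluster_is_connected({"master", "fo1", "fo2"}, [("master", "fo1"), ("fo1", "fo2")])` returns `True`.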

Normal Cluster Operation

Central Configuration, Distributed Data Storage, and Central Notifications.

During normal operation, the “Primary Master” is used to configure devices and sensors (using the Web interface or Windows GUI). The master automatically distributes the configuration to all other nodes in real time. All nodes permanently monitor the network according to this common configuration, and each node stores its results in its own database. This way, the storage of monitoring results is also distributed across the cluster. The downside of this concept is that monitoring traffic and load on the network are multiplied by the number of cluster nodes, but this should not be a problem for most usage scenarios. Users can review the monitoring results by logging into the Web interface of any cluster node in read-only mode. Because the monitoring configuration is centrally managed, however, it can only be changed on the master node.
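The following Python sketch models this division of labor under simplifying assumptions. Configuration changes are accepted only on the master and copied to every node. Each node runs the checks itself and appends the results to its own list, which stands in for its own database. The classes `Node`, `Cluster`, and `ReadOnlyError` and the `probe` callback are hypothetical illustrations, not PRTG's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Node:
    name: str
    is_master: bool = False
    # sensor id -> settings, as distributed by the master
    config: Dict[str, dict] = field(default_factory=dict)
    # (sensor id, status) pairs; stands in for this node's own database
    results: List[Tuple[str, str]] = field(default_factory=list)


class ReadOnlyError(Exception):
    """Raised when a configuration change is attempted on a non-master node."""


class Cluster:
    def __init__(self, nodes: List[Node]) -> None:
        self.nodes = nodes

    def change_config(self, on: Node, sensor_id: str, settings: dict) -> None:
        # Configuration changes are only accepted on the master node.
        if not on.is_master:
            raise ReadOnlyError(f"{on.name} is read-only; edit the master instead")
        # Apply the change and copy it to every node, master included.
        for node in self.nodes:
            node.config[sensor_id] = dict(settings)

    def run_checks(self, probe: Callable[[str, str], str]) -> None:
        # Every node checks every configured sensor itself and
        # stores the result in its own storage.
        for node in self.nodes:
            for sensor_id in node.config:
                node.results.append((sensor_id, probe(node.name, sensor_id)))
```

`run_checks` calls `probe` once per node and sensor. Two nodes with three sensors therefore produce six checks per round, which is the traffic multiplication mentioned above.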

If downtimes or threshold breaches are discovered by one or more nodes, only the primary master sends out notifications to the administrator (via email, SMS, etc.). This way, the administrator is not flooded with notifications from all cluster nodes when failures occur. Note that there is also a new sensor state, “partial down”, which means that the sensor shows an error on some nodes, but not on all.
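The state aggregation and the notification rule can be sketched as a small hypothetical model. The names `cluster_state` and `should_notify` are illustrative only. Treating every state other than UP as worth a notification is an assumption made for brevity.

```python
from enum import Enum
from typing import Dict


class State(Enum):
    UP = "up"
    PARTIAL_DOWN = "partial down"
    DOWN = "down"


def cluster_state(errors_by_node: Dict[str, bool]) -> State:
    """Combine per-node results (True = this node sees an error) into one state."""
    failing = sum(errors_by_node.values())  # True counts as 1
    if failing == 0:
        return State.UP
    if failing == len(errors_by_node):
        return State.DOWN
    # Error on some nodes, but not on all.
    return State.PARTIAL_DOWN


def should_notify(node: str, master: str, state: State) -> bool:
    """Only the node holding the master role sends notifications.

    Assumption: any state other than UP is worth a notification.
    """
    return node == master and state is not State.UP
```

For example, `cluster_state({"node1": True, "node2": False})` returns `State.PARTIAL_DOWN`. Only `should_notify("node1", "node1", ...)` can return `True` when `node1` is the master, so failover nodes never send duplicates.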

Failure Cluster Operation

- Failure scenario 1

If one or more of the Failover nodes are disconnected from the cluster (due to hardware or network failures), the remaining cluster continues to work without disruption.

- Failure scenario 2

If the Primary Master node is disconnected from the cluster, one of the failover nodes becomes the new master node. It takes over control of the cluster and will also manage notifications until the primary master reconnects to the cluster and takes back the master role.
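The master role hand-over can be sketched as follows. This version assumes that failover nodes take over in a fixed priority order. The text above does not say how PRTG picks which failover node becomes master, so `failover_order` and `current_master` are hypothetical.

```python
from typing import List, Optional, Set


def current_master(primary: str, failover_order: List[str],
                   connected: Set[str]) -> Optional[str]:
    """Return the node that should hold the master role.

    Hypothetical rule: the text only says that "one of the failover nodes"
    takes over; a fixed priority order is assumed here for illustration.
    """
    # The primary master holds the role whenever it is connected, so it
    # takes the role back as soon as it reconnects.
    if primary in connected:
        return primary
    # Otherwise the highest-priority connected failover node takes over.
    for node in failover_order:
        if node in connected:
            return node
    # No node left to take the role.
    return None
```

Suppose the primary `node1` drops out. Then `current_master("node1", ["node2", "node3"], {"node2", "node3"})` returns `"node2"`. Once `node1` is back in the connected set, the function returns `"node1"` again.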

Sample Cluster Configurations

There are several cluster scenarios possible in PRTG.

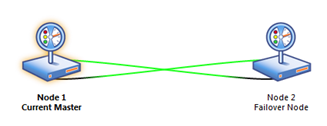

- Simple Failover. This is the most common usage of PRTG in a cluster. Both servers monitor the same network. When Node 1 goes down, Node 2 automatically takes over the Master role until Node 1 is back online.

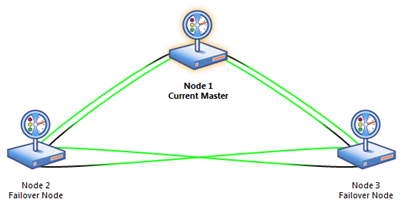

- Double Failover. This is a more advanced failover cluster. Even if two of the nodes fail, network monitoring continues with a single node (in the Master role) until the other nodes are back online.

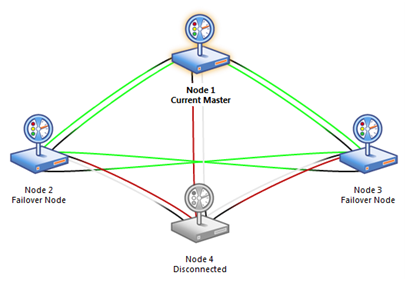

- The following four-node scenario shows one node in disconnected mode. The administrator can disconnect a node at any time for maintenance tasks, or to keep a powered-off server on standby in case another node’s hardware fails.

Usage Scenarios for the PRTG Cluster

PRTG’s cluster feature is quite versatile and covers the following usage scenarios.

Failover LAN Cluster

PRTG runs on two (or more) servers inside the company LAN (i.e. close to each other from a network topology perspective). All cluster nodes monitor the LAN, and only the current master node sends out notifications.

Objectives:

- Reaching 100% uptime for the monitoring system (e.g. to control SLAs, create reliable billing data and ensure that all failures create alarms if necessary).

- Avoiding monitoring downtimes

Failover WAN or Multi Location Cluster

PRTG runs on two (or more) servers distributed throughout a multi-segmented LAN, or even geographically distributed around the globe on the Internet. All cluster nodes monitor the same set of servers/sensors, and only the current master node sends out notifications.

Objectives:

- Creating multi-site monitoring results for a set of sensors, and/or

- Making monitoring and alerting independent of a single site, datacenter, or network connection.

PRTG Cluster Features

- Paessler’s own cluster technology is completely built into the PRTG software; no third-party software is necessary

- PRTG cluster features central configuration and notifications on the cluster master

- Configuration data and status information are automatically distributed among cluster members in real time

- Storage of monitoring results is distributed to all cluster nodes

- Each cluster node can take over the full monitoring and alerting functionality in a failover case

- Cluster nodes can run on different operating systems and different hardware/virtual machines; they should have similar system performance and resources, though

- Node-to-node communication is always secure using SSL-encrypted connections

- Automatic cluster update (an update to a newer PRTG version needs to be installed on one node only; all other nodes of the cluster are updated automatically)

What is Special About a PRTG Cluster (Compared to Similar Products)

- Each node is truly self-sufficient (not even the database is shared)

- Our cluster technology is 100% “home-grown” and does not rely on any external cluster technology like Windows Cluster, etc.